CSSE at SEAA 2025

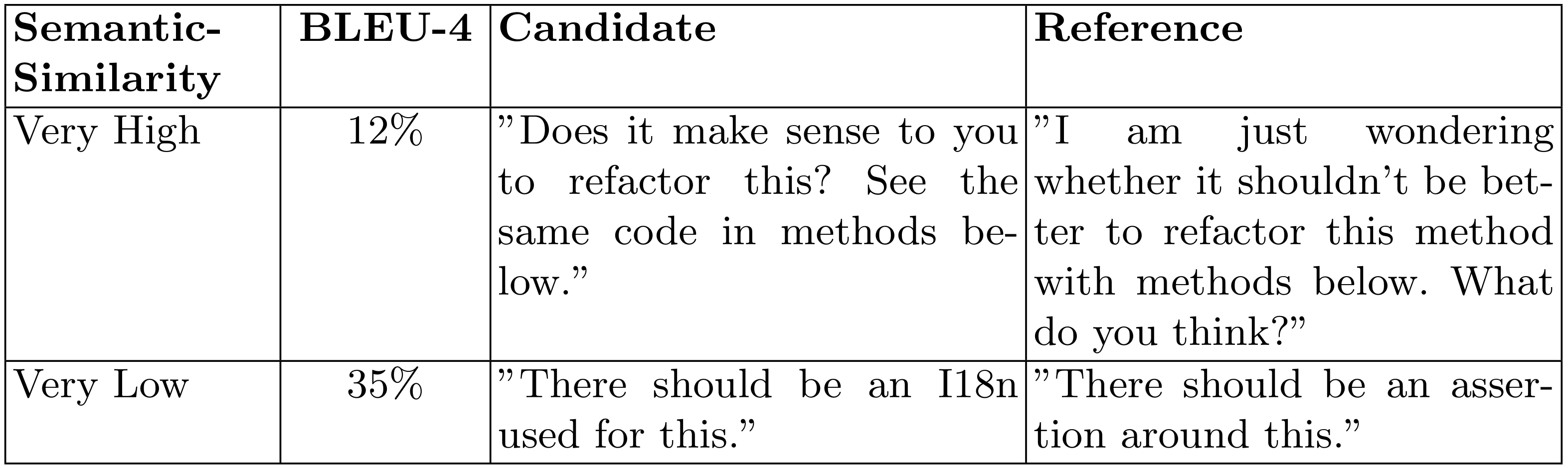

Our paper, “Empirical Analysis of OpenAI Embeddings for Semantic Code Review Comment Similarity,” has been accepted for presentation at SEAA 2025. This work tackles a critical challenge in code review automation: evaluating the quality of generated review comments. It also highlights the need for rigorous empirical methods in understanding LLM-driven tooling. By comparing traditional lexical metrics such as BLEU with OpenAI embedding-based measures, our study demonstrates that embedding similarity aligns far more closely with human judgments. We believe this evidence-backed approach is an important step toward evaluating the effectiveness of automated code review models.
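
For readers unfamiliar with the distinction, here is a minimal sketch of why a lexical metric and an embedding-based one can disagree on the same pair of review comments. It is an illustration only, not the paper's evaluation pipeline: `unigram_precision` is a simplified stand-in for BLEU (just clipped unigram overlap), and the two vectors are made-up placeholders for what an embedding model would return, so the numbers say nothing about our actual results.

```python
import math
from collections import Counter


def unigram_precision(candidate: str, reference: str) -> float:
    """Clipped unigram precision: a simplified stand-in for BLEU, not full BLEU."""
    cand_tokens = candidate.lower().split()
    if not cand_tokens:
        return 0.0
    ref_counts = Counter(reference.lower().split())
    cand_counts = Counter(cand_tokens)
    # Count each candidate token at most as often as it appears in the reference.
    overlap = sum(min(n, ref_counts[tok]) for tok, n in cand_counts.items())
    return overlap / len(cand_tokens)


def cosine_similarity(a, b) -> float:
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Two comments that make the same point using different words.
reference = "Check for null before dereferencing the pointer"
candidate = "Add a guard so ptr is not None when accessed"

# Hypothetical placeholder vectors. In practice these would come from an
# embedding model; the values here are invented purely for illustration.
reference_vec = [0.8, 0.1, 0.6]
candidate_vec = [0.7, 0.2, 0.6]

lexical = unigram_precision(candidate, reference)
semantic = cosine_similarity(reference_vec, candidate_vec)

print(f"lexical overlap:      {lexical:.2f}")
print(f"embedding similarity: {semantic:.2f}")
```

Because the two comments share no words, the lexical score is zero even though a human reviewer would likely read them as equivalent. An embedding-based score depends on the vectors rather than on shared tokens, which is the property our study examines.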